Agent-Written Code Needs More Than Git

The former GitHub CEO just raised $60M to rebuild developer tooling for the agentic era. He might be right that git needs a rethink — I've been hacking around the same problems.

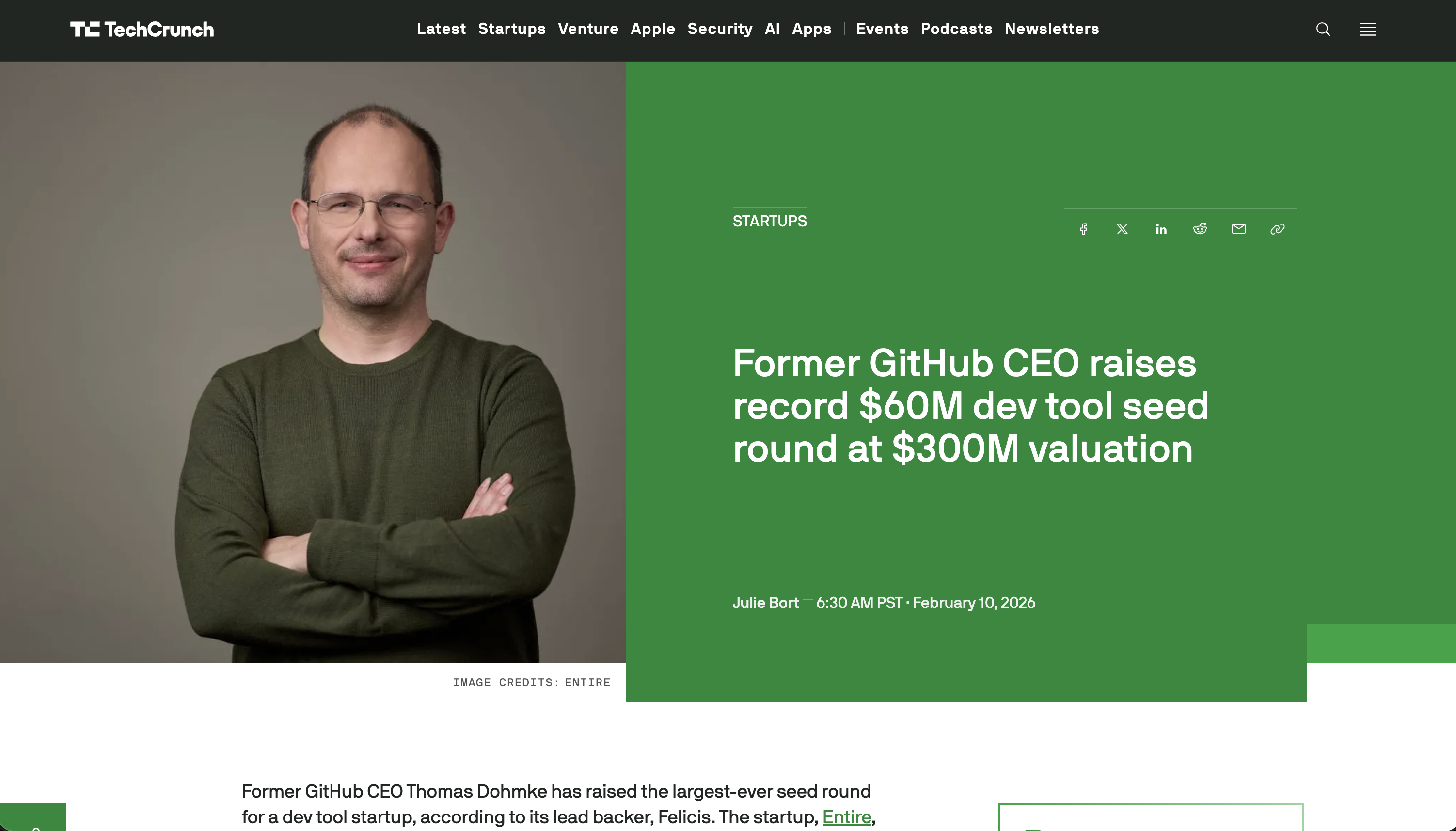

The former GitHub CEO just raised a $60M seed round at a $300M valuation. For a CLI tool. Let that sink in.

Thomas Dohmke left GitHub and launched Entire, a developer platform built from scratch for the age of AI coding agents. It’s the largest seed round in dev tools history.

My first reaction was “that’s insane.” My second reaction was “wait, I’ve been solving the same problem with duct tape and hooks.”

The Problem Is Real

If you’re using AI agents like Claude Code or Gemini CLI daily — and I am — you’ve already felt it. Git was built for humans writing code. It assumes you know what you changed and why. It assumes your commit messages mean something. It assumes the person who wrote the code will remember what they were thinking.

AI agents break all of that.

When Claude Code rewrites a module for me, the commit message says what happened, but not why. There’s no trace of the conversation that led there. No record of the three approaches the agent considered and rejected. No way to know if the prompt was “refactor this for clarity” or “make this 10x faster and I don’t care about readability.”

The transcript — the actual reasoning behind the code — lives in a terminal session that vanishes when you close the tab.

My Duct Tape Solution

I ran into this a few weeks ago when I wanted to resume a Claude Code session after a reboot. The session was gone, and I had no idea what context the agent had when it made certain decisions.

So I did what any engineer would do: I wrote a hook. A simple Claude Code hook that links each commit to its session ID via a git trailer. Nothing fancy — just enough that I can trace a commit back to the conversation that produced it.
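
The post doesn't show the hook itself, so here is a minimal sketch of the same idea. It is not the author's actual code. It assumes the agent's session ID reaches git through an environment variable, called `AGENT_SESSION_ID` here. That name is hypothetical, and how you export it from your agent's own hook is up to you. The sketch also uses a made-up `Session-Id` trailer key. Installed as a plain git `commit-msg` hook, it appends the trailer to the message file git passes in. It also includes a helper that reads the ID back out. Trailer detection is deliberately simpler than git's own rules.

```python
#!/usr/bin/env python3
"""Sketch of a git commit-msg hook that stamps commits with an agent session ID.

Hypothetical conventions (not a standard, not the author's exact setup):
  - the session ID arrives in the AGENT_SESSION_ID environment variable;
  - the trailer key is "Session-Id".
"""
import os
import re
import sys
from typing import Optional

TRAILER_KEY = "Session-Id"            # hypothetical trailer key
SESSION_ENV_VAR = "AGENT_SESSION_ID"  # hypothetical env var name

# Simplified trailer line: "Token: value" (git's real rules are more lenient).
_TRAILER_RE = re.compile(r"^[A-Za-z0-9][A-Za-z0-9-]*: .+$")


def _split_paragraphs(message: str) -> list[str]:
    """Split a commit message into paragraphs separated by blank lines."""
    parts = re.split(r"\n(?:[ \t]*\n)+", message.strip())
    return [p for p in parts if p.strip()]


def _is_trailer_block(paragraph: str) -> bool:
    """True if every line of the paragraph looks like 'Token: value'."""
    return all(_TRAILER_RE.match(line) for line in paragraph.splitlines())


def add_session_trailer(message: str, session_id: str) -> str:
    """Return the message with a 'Session-Id: <id>' trailer appended.

    Idempotent. Assumes a message without '#' comment lines, such as one
    written with `git commit -m` or by the agent itself.
    """
    paragraphs = _split_paragraphs(message)
    trailer = f"{TRAILER_KEY}: {session_id}"
    # The first paragraph is the subject/body, so it never counts as trailers.
    if len(paragraphs) > 1 and _is_trailer_block(paragraphs[-1]):
        if trailer not in paragraphs[-1].splitlines():
            paragraphs[-1] += "\n" + trailer  # join the existing trailer block
    else:
        paragraphs.append(trailer)  # start a new trailer paragraph
    return "\n\n".join(paragraphs) + "\n"


def find_session_id(message: str) -> Optional[str]:
    """Return the Session-Id trailer value from a commit message, or None."""
    paragraphs = _split_paragraphs(message)
    if len(paragraphs) < 2 or not _is_trailer_block(paragraphs[-1]):
        return None
    prefix = f"{TRAILER_KEY}: "
    for line in reversed(paragraphs[-1].splitlines()):
        if line.startswith(prefix):
            return line[len(prefix):]
    return None


def main(argv: list[str]) -> int:
    """Hook entry point: git passes the commit message file path as argv[1]."""
    session_id = os.environ.get(SESSION_ENV_VAR)
    if not session_id or len(argv) < 2:
        return 0  # not an agent session: leave the message untouched
    with open(argv[1], encoding="utf-8") as f:
        message = f.read()
    with open(argv[1], "w", encoding="utf-8") as f:
        f.write(add_session_trailer(message, session_id))
    return 0


# Only act when git invokes us with a message file path.
if __name__ == "__main__" and len(sys.argv) > 1:
    sys.exit(main(sys.argv))
```

To try it, save the file as `.git/hooks/commit-msg` and make it executable. Because the ID lives in an ordinary trailer, plain git can list it later, for example with `git log --format='%h %(trailers:key=Session-Id,valueonly)'`. If you would rather not parse messages yourself, `git interpret-trailers --in-place --trailer "Session-Id: <id>" <file>` appends the trailer using git's full trailer rules.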

Combined with Mergify’s CLI for stacking PRs, it made my workflow usable. But it’s duct tape. It doesn’t capture the transcript, doesn’t track attribution, doesn’t handle multi-session work.

Which is exactly the gap Entire is going after.

What Entire Actually Claims to Be

Beyond the buzzwords in the press release, Entire describes three pieces:

- Checkpoints — an open source CLI that captures session context (prompts, transcripts, reasoning) alongside every commit, stored in git without polluting your history

- A semantic reasoning layer — meant to let multiple AI agents collaborate on the same codebase with shared context

- An AI-native UI — designed for agent-to-human collaboration rather than human-to-human

They’re upfront that this isn’t a finished product. The Checkpoints CLI is the first concrete thing they’ve shipped, and it’s open source. The other two pieces are what the $60M is meant to build. Fair enough. The CLI is what exists today, so that’s the part worth judging.

Why $60M for This?

The bet isn’t that the current CLI is worth $300M. The bet is that the developer tooling stack needs to be rebuilt for a world where most code is written by agents, and the first company to nail the foundation wins.

Think about it: if 99% of code is agent-written in two years (which is where things are heading), then the workflows we use today to review, debug, and understand code are fundamentally broken. You can’t review AI-written code the same way you review human-written code. You need the context: what the agent was trying to do, what constraints it had, and what alternatives it considered.

That’s a platform opportunity, and $60M is the price of a credible attempt at it. Whether Entire is the one to build it is a different question — but the problem is real and urgent.

My Take

Dohmke knows exactly where GitHub’s limits are (he ran it). The investor list — Felicis, Madrona, Olivier Pomel — signals real conviction. And the core insight, that agent context is as important as the code itself, is something I believe in my bones because I’ve been hacking around it myself.

Their long-term ambition seems to involve moving beyond git. I’m more skeptical of that part. Git is unkillable. My bet is that for the next few years, the reality will be hooks and duct tape around git, and honestly, that’s probably enough. Git’s data model bends a lot further than people think before it breaks.

There’s a deeper tension, though. Entire’s model assumes humans are still in the loop — driving agents, reviewing output, caring about attribution. But that’s already not quite how it works. I haven’t written a line of code in months. I describe what I want, the agent writes it, I tell it to fix its mistakes, and it does. I’m not a developer anymore — I’m a director.

And the trajectory is obvious: agents won’t need directors much longer either. If agents are fully autonomous, who’s the audience for commit context and session transcripts? The agent doesn’t need to remember what it was thinking — it can just re-derive it. The human who never touched the code doesn’t need line-level attribution.

That could go either way for Entire. Maybe full autonomy makes provenance more critical — precisely because no human was involved, you need a machine-readable audit trail. Or maybe it makes the whole problem vanish — agents that manage their own context don’t need git hacks to preserve it.

Either way, if you’re leading an engineering team right now, you should be thinking about how you’ll audit, understand, and trust the code your agents produce — whether there’s a human in the loop or not.

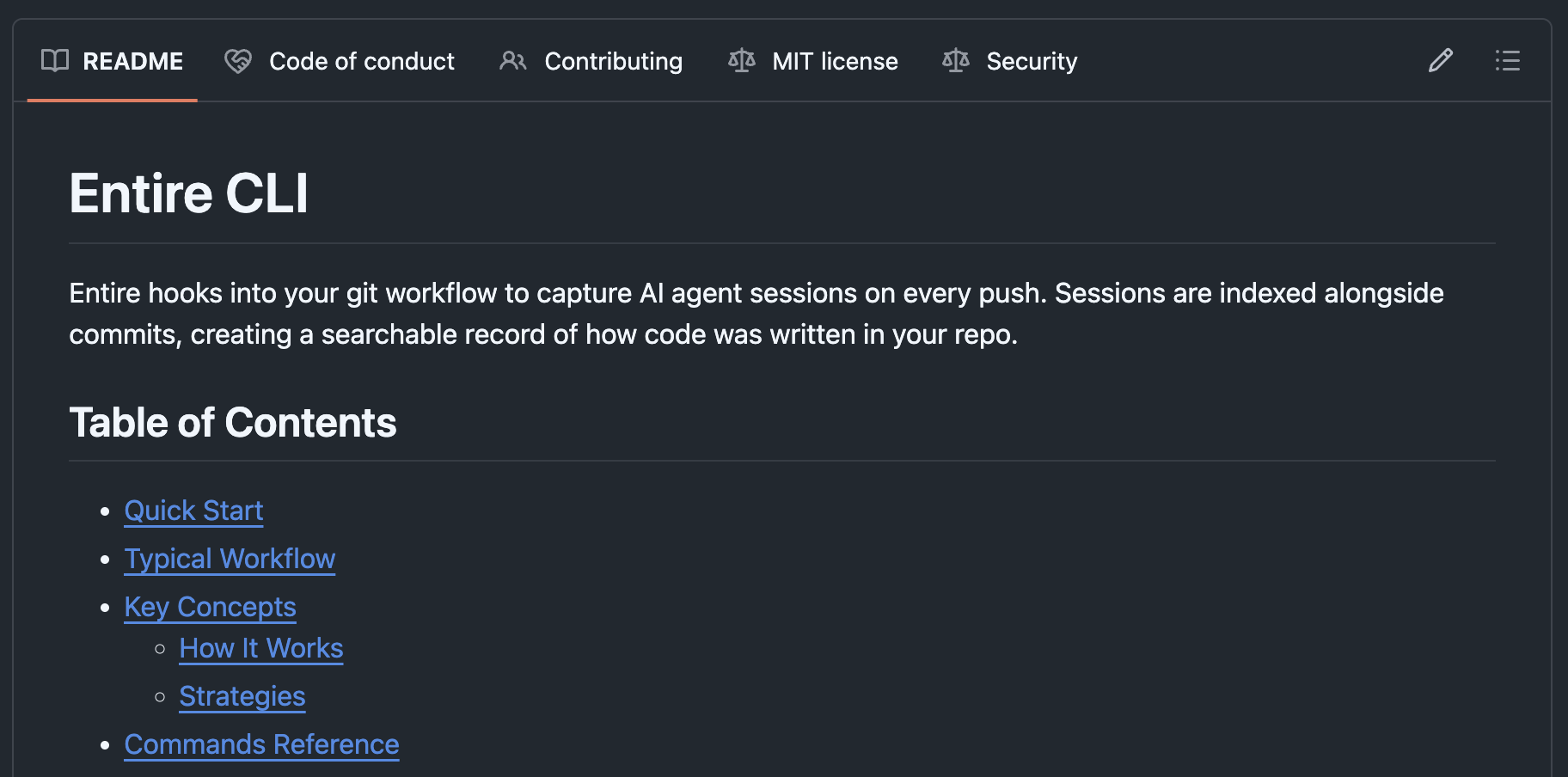

Next up, I’ll dig into the actual source code and show you how Entire’s Checkpoints CLI works under the hood. It’s a clever piece of engineering that abuses git internals in ways I genuinely admire.
